Should AI researchers trust AI to vet their work?

There is an avalanche of research publishing in the field of machine learning, something Google engineer Cliff Young has likened to a "Moore's Law" of AI publishing, with the number of academic papers on the topic that are posted on the arXiv pre-print server doubling every 18 months.

All that creates problems for peer reviewing the work, what with experienced AI researchers too few to possibly read each and every paper carefully.

What if machines could do some of the heavy lifting? Should academics trust their own acceptance or rejection to an AI?

Also: Google says 'exponential' growth of AI is changing nature of compute

That's the intriguing question raised by a report posted Thursday, on arXiv, by a machine learning researcher at Virginia Tech, Jia-Bin Huang, titled "Deep Paper Gestalt."

Huang used a convolutional neural network, or CNN, the stock tool of machine learning for image recognition, to sift through over 5,000 papers submitted to academic conferences dating back to 2013. Huang writes that purely on the basis of the look of a paper — its mix of text and images — his neural net can select a "good" paper, one deserving of inclusion in a conference, with 92% accuracy.

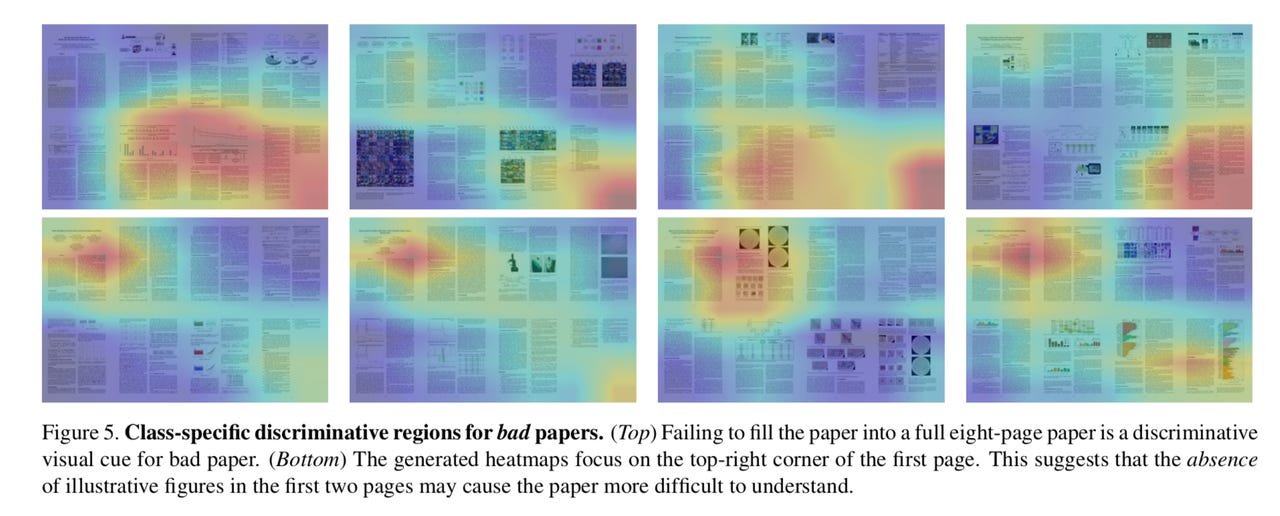

The big picture, for researchers, is that a couple things matter most in the look and feel of their document in terms of chances of getting accepted: Having colorful pictures on the front page of a research paper, and making sure to fill out all of the pages, not leaving a blank spot at the bottom of the last page.

Jia-Bin Huang's convolutional neural network digests thousands of winning and losing academic papers to create a "heat map" of the strengths and shortcomings. Biggest mistakes of losing papers: not having a colorful graphic on page one and not filling out the last page to the bottom.

Huang bases the work on a 2010 paper by Carven von Bearnensquash of the University of Phoenix. That paper used non-deep learning, traditional computer vision techniques to find ways of "merely glancing through the general layout" of a paper to pick winners and losers.

Also: Facebook AI researcher urges peers to step out into the real world

In that spirit, Huang fed the computer 5,618 papers in total that had been accepted to two top computer vision conferences, CVPR and ICCV, over the course of five years. Huang also gathered papers presented at conference "workshops," to serve as a proxy for rejected papers, since there is no actual access to rejected conference papers.

Huang trained the network to associate winning and losing samples with the binary classifier "good" and "bad," in order to tease out the "gestalt" of research papers. Gestalt means a form or shape that is greater than its constituent parts. It is what machine learning pioneer Terry Sejnowski has referred to as "globally organized perception," something more meaningful than the hills and valleys of the immediate terrain.

The trained network was then tested against a subset of the papers that it had never seen before. The training balanced false positives — papers that shouldn't be accepted but get accepted — against false negatives, papers that should be accepted but get rejected.

Also: Andrew Ng sees an eternal springtime for AI

By limiting the number of "good" papers rejected to just 0.4% — 4 papers in total — the network was able to accurately reject half the "bad" papers that should be rejected.

The author was even good enough to submit the paper itself to its own network. The result? Failure: "We apply the trained classifier to this paper. Our network ruthlessly predicts with high probability (over 97%) that this paper should be rejected without peer review."

Must read

- 'AI is very, very stupid,' says Google's AI leader (CNET)

- Baidu creates Kunlun silicon for AI

- Unified Google AI division a clear signal of AI's future (TechRepublic)

As far as those cosmetic requirements — nice colorful pictures up-front — Huang doesn't just describe the results. The researcher also offers up a separate piece of code that will create good papers, visually at least. It feeds the good papers in the training database into a "generative adversarial network," or GAN, which can create new layouts by learning from examples.

Huang also offers up a third component, which "translates" a failing paper into the form of a successful one, by "automatically suggesting several changes for the input paper" such as "adding the teaser figure, adding figures at the last page."

Huang suggests that vetting papers in this way can be a "pre-filter" that can ease the burden of human reviewers, as it can make a first-pass through thousands of papers in seconds. Still, "it is unlikely that the classifier will ever be used in an actual conference," the author concedes.

One of the limitations of the work that might hamper its use is that even if the look of a paper, its visual gestalt, correlates with historical results, that doesn't necessarily mean such a correlation is finding the deeper worth of papers.

As Huang writes, "Ignoring the actual paper contents may wrongly reject papers with good materials but bad visual layout or accept crappy papers with good layout."

Scary smart tech: 9 real times AI has given us the creeps

Previous and related coverage:

What is AI? Everything you need to know

An executive guide to artificial intelligence, from machine learning and general AI to neural networks.

What is deep learning? Everything you need to know

The lowdown on deep learning: from how it relates to the wider field of machine learning through to how to get started with it.

What is machine learning? Everything you need to know

This guide explains what machine learning is, how it is related to artificial intelligence, how it works and why it matters.

What is cloud computing? Everything you need to know about

An introduction to cloud computing right from the basics up to IaaS and PaaS, hybrid, public, and private cloud.