How work devices may evolve to keep up in the AI era

The emergence of the neural processing unit (NPU) may be heralded as a game changer for personal computers (PCs), but finding the right balance in product design remains critical to delivering what consumers want.

Growing workplace demand for artificial intelligence (AI) tools, including generative AI (GenAI), has prompted industry players such as chipmakers and device manufacturers to ensure their products can support this shift.

In particular, PCs will need to keep up as companies move AI workloads from the cloud to client devices. This shift can improve performance by removing the need to send AI workloads to the cloud and back to the user's device. Companies could also bolster data privacy and security by retaining data on the device, rather than moving it to and from the cloud.

Because AI tasks that run locally on a PC are typically executed by the CPU (central processing unit), GPU (graphics processing unit), or both, the device's performance and battery life can degrade, as neither is optimized for processing AI tasks. That's where AI-specific chips, or NPUs, come in.

User requirements remain focused on hybrid work

During the global pandemic, user requirements revolved around the ability to work remotely and support a geographically dispersed workforce. This drove improvements in built-in camera and videoconferencing capabilities, according to Tom Butler, Lenovo's executive director of commercial portfolio and product management, who heads the hardware maker's commercial notebook portfolio.

This pushed Lenovo to focus its efforts on enhancing camera and video quality as well as building microphones that support noise segmentation. Users also wanted good laptop performance, including long battery life, Butler told ZDNET.

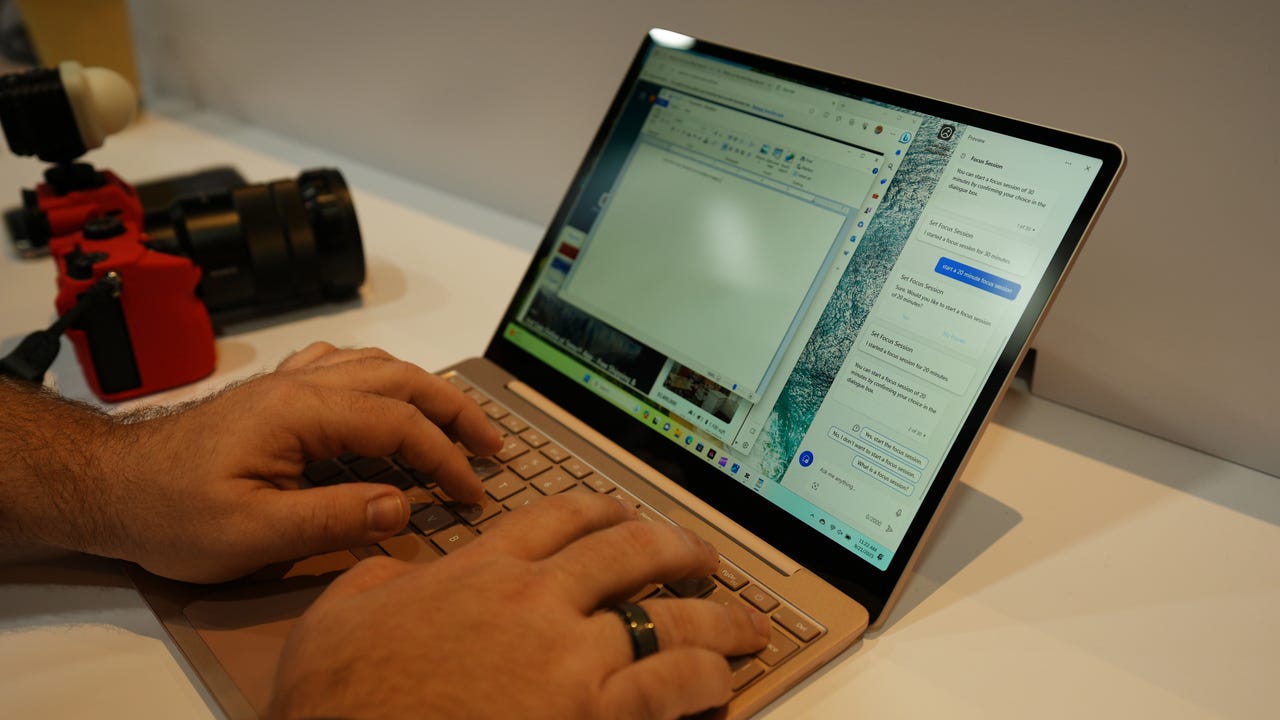

With workforces around the world still largely remote or hybrid, user requirements have remained focused on performance, battery life, and videoconferencing capabilities. The rise of AI PCs has pushed market players to look at offloading some workloads, such as noise-cancelling and background blurring functions, to NPUs.

Finding the optimal way to distribute workloads is a key objective now that PCs have three core engines on board (the CPU, GPU, and NPU), Butler noted, adding that all of Lenovo's new commercial laptops ship with NPUs. "We're effectively moving into the AI PC era," he said.

In her keynote speech at SXSW this week, AMD CEO Lisa Su also described AI as an "evolution in the PC game."

"It's the beginning of allowing us to become much more productive," Su said, adding that the AI PC era is already here, with products in the market today. She also said that AMD is working to optimize its chips for AI, including building "better software" for productivity.

2024 is the year customers will start asking if their device is AI-capable, Asus COO Joe Hsieh said in an interview with ZDNET.

Like Lenovo, Asus is working to ensure its new products have NPUs or a core chip component for AI workloads.

Asus is also focused on developing the necessary software engine and tools to help users train their own AI models, Hsieh said, noting that most large language models are currently only trained on public data. With personal devices handling AI workloads, Asus believes users will want these applications to use their data rather than public data.

Optimizing hardware and software for AI

Asked which requirements are toughest to balance as demand for AI PCs grows, Butler pointed to the usual tradeoffs among thinner and lighter devices, longer battery life, and better performance.

Boosting performance will inevitably impact battery longevity and vice versa, he said. "With NPUs [now available], though, it allows us to offload some of the workloads that traditionally will tax either the GPU or CPU," he noted. Noise-cancelling capabilities, for instance, can be moved to the NPU. Butler noted that software vendors are looking to see how they can optimize their code to take advantage of NPUs.

Meanwhile, Asus wants to provide tools to help developers choose the right compute resources, according to Albert Chang, Asus' vice president and co-head of the AIoT business group. Application developers should be able to determine whether the CPU, NPU, or integrated GPU needs to power their AI tool, Chang said.

The coming wave of AI PCs

Forrester predicts that 60% of businesses will offer GenAI-powered applications to serve employees and customers in 2024.

Research firms are also anticipating that AI will boost demand for PCs. IDC projects that shipments of AI PCs will climb from 50 million units in 2024 to more than 167 million in 2027, when they will account for almost 60% of overall PC shipments. IDC defines AI PCs as personal computers with system-on-a-chip capabilities built to run GenAI tasks on the device.

IDC also projects 265.4 million PCs will ship worldwide in 2024, up 2% from 2023 and fueled by the introduction of AI PCs. These systems will push shipments to 292.2 million units in 2028, clocking a 2.4% compound annual growth rate between 2024 and 2028. "The presence of on-device AI capabilities is not likely to lead to an increase in the PC installed base, but it will certainly lead to a growth in average selling prices," said Jitesh Ubrani, IDC's research manager for worldwide mobile and consumer device trackers.
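The growth figure above can be checked with the standard compound annual growth rate formula. The following is a minimal sketch, assuming the rate is compounded over the four year-on-year steps from 2024 to 2028; the function name `cagr` is illustrative and does not come from IDC.

```python
def cagr(start_value: float, end_value: float, periods: int) -> float:
    """Compound annual growth rate over a number of yearly periods."""
    if start_value <= 0 or periods <= 0:
        raise ValueError("start_value and periods must be positive")
    return (end_value / start_value) ** (1 / periods) - 1


# Shipment figures (millions of units) as quoted from IDC in the article.
shipments_2024 = 265.4
shipments_2028 = 292.2

# Assumption: 2024 to 2028 is treated as four compounding periods.
rate = cagr(shipments_2024, shipments_2028, 2028 - 2024)
print(f"Implied CAGR, 2024 to 2028: {rate:.1%}")
```

Under that assumption, the result rounds to the 2.4% cited by IDC.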

Gartner predicts 54.5 million AI PCs will ship by year-end, while the number of GenAI smartphones will reach 240 million. These AI devices will account for 22% of all PCs and 22% of basic and premium smartphones in 2024. The research firm defines AI PCs as those with dedicated AI accelerators or cores, NPUs, accelerated processing units (APUs), or tensor processing units (TPUs), designed to optimize and accelerate AI tasks on the device.

IDC categorizes AI PCs into three groups. The first has PCs with NPUs that clock up to 40 tera operations per second (TOPS) and enable specific AI features in applications running locally on the device. AMD, Intel, Apple, and Qualcomm are among chipmakers that ship such NPUs today.

The second category comprises next-generation AI PCs equipped with NPUs that offer between 40 and 60 TOPS and have an "AI-first" OS that supports "persistent and pervasive" AI capabilities, IDC said. AMD, Intel, and Qualcomm are expected to begin releasing these chips in 2024, while Microsoft is anticipated to unveil updates to Windows 11 to leverage these NPUs.

The final category, advanced AI PCs, offers more than 60 TOPS of NPU performance and is not currently in any vendor's announced product pipeline.
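IDC's three groups amount to a simple threshold rule on NPU throughput, which can be sketched as follows. This is an illustration only: the function name and labels are hypothetical, the treatment of exactly 40 and exactly 60 TOPS is an assumption (the article does not say which group those boundary values fall into), and the "AI-first" OS requirement for the second group is not modeled.

```python
def idc_ai_pc_category(npu_tops: float) -> str:
    """Map an NPU throughput figure (in TOPS) to one of the three groups above."""
    if npu_tops <= 0:
        raise ValueError("npu_tops must be positive")
    # Assumption: exactly 40 and exactly 60 TOPS are placed in the middle group.
    if npu_tops < 40:
        return "first category (up to 40 TOPS)"
    if npu_tops <= 60:
        return "next-generation (40 to 60 TOPS)"
    return "advanced (more than 60 TOPS)"


# Hypothetical TOPS values, chosen only to exercise each branch.
for tops in (16, 45, 75):
    print(tops, "TOPS ->", idc_ai_pc_category(tops))
```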